By Abigail Turner-Franke, Dan Falvey, and Eric Torgerson

Maintaining high-quality EM video while fishing is important both for keeping EM video review costs affordable and for providing fishery managers with accurate data. High-quality EM video is also fundamental to developing machine learning capabilities that will further reduce review costs over the long term. That said, a lot is going on when a vessel is actively fishing, and vessel and crew safety and operational efficiency are the primary focus of the skipper and crew. To maintain high-quality EM video in the complex, dynamic environment of a commercial fishing vessel, near real-time feedback to the vessel operator when EM video quality degrades is crucial. The overall goal of this project was to find a way to improve image quality. We know that by developing a tool that can identify an image quality issue and alert the skipper, we can work toward producing more usable video data per trip, first for reviewers and then for fisheries managers.

Since 2010, the Alaska Longline Fishermen’s Association (ALFA) and the North Pacific Fisheries Association (NPFA) have been working with demersal longline and pot fixed-gear vessels to develop practical EM solutions for fisheries monitoring in Alaska. The Alaska region fixed gear EM pool currently has approximately 170 vessels participating in a voluntary program in which EM video is used to directly estimate discards for catch accounting purposes. In early 2020, fresh out of the National Electronic Monitoring Workshop in Seattle, where machine learning was a hot topic, ALFA’s Dan Falvey and NPFA’s Abigail Turner-Franke designed a pilot project with Chordata LLC’s Eric Torgerson to develop computer vision tools that detect EM video quality issues, such as water drops, condensation, and dirty lenses, in real time onboard the vessel. Thanks to funding from the National Fish and Wildlife Foundation, the pilot project was launched and is showing promising results.

To start the project, ALFA and NPFA worked with vessel operators and the Pacific States Marine Fisheries Commission (PSMFC) to assemble 827 video clips, each 30 to 60 minutes long, representing high, medium, and low image quality from five demersal longline vessels over a three-year period. As rated by the primary reviewer, 389 clips had good overall image quality, 426 had medium image quality, and 12 had low image quality. Causes of reduced image quality varied and included water droplets, haze, dried salt crust, and lens flare from the sun and deck lighting. A substantial majority of issues were related to water directly on the lens, to haze and salt crust left behind by saltwater droplets, or to condensation inside the camera.
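As a quick arithmetic check on the quality breakdown, the three categories add up to the 827 clips assembled, and clips rated low overall quality make up only a small share of the set. The short Python snippet below simply tallies the reported counts; the category keys are labels chosen for illustration.

```python
# Clip counts by overall image quality, as reported by the primary reviewer.
counts = {"good": 389, "medium": 426, "low": 12}

total = sum(counts.values())
assert total == 827  # matches the number of clips assembled

for quality, n in counts.items():
    # Share of the dataset in each quality category, to one decimal place.
    print(f"{quality:>6}: {n:3d} clips ({100 * n / total:.1f}%)")
```

Run as written, this reports roughly 47.0% good, 51.5% medium, and 1.5% low-quality clips.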

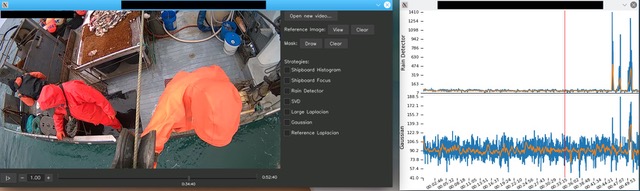

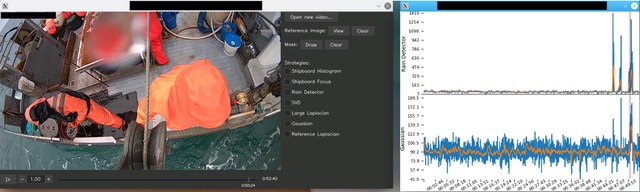

Several image quality detection approaches were researched and tested as part of this project. Two were identified as the most likely to bear fruit: a “machine learning” model for detecting water droplets and a “difference of Gaussians” approach for detecting loss of focus or contrast. These two approaches were then developed to a proof-of-concept level and evaluated using the sample EM video clips. Clips with good baseline image quality were key to training the algorithms.
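The report does not spell out exactly how detector output was compared with “expected results.” Assuming each clip ends up with one flag from the detector and one expected label (for example, derived from the primary reviewer's assessment), a per-clip agreement rate and the split between false positives and false negatives could be tallied as in this Python sketch. The function name `agreement_summary` and the ten labels are invented for illustration and are not project data.

```python
from typing import Dict, Sequence


def agreement_summary(flagged: Sequence[bool],
                      expected: Sequence[bool]) -> Dict[str, float]:
    """Compare per-clip detector flags with per-clip expected labels.

    A clip "agrees" when the detector flag matches the expected label.
    """
    if len(flagged) != len(expected):
        raise ValueError("flagged and expected must have the same length")
    if not flagged:
        raise ValueError("need at least one clip")
    # Detector flagged a problem that the expected label says is not there.
    false_pos = sum(1 for f, e in zip(flagged, expected) if f and not e)
    # Detector missed a problem that the expected label says is there.
    false_neg = sum(1 for f, e in zip(flagged, expected) if e and not f)
    n = len(flagged)
    return {
        "agreement": (n - false_pos - false_neg) / n,
        "false_positives": false_pos,
        "false_negatives": false_neg,
    }


# Ten invented clips, for illustration only.
detector_flags = [True, True, False, False, True, False, False, False, True, False]
expected_flags = [True, False, False, False, True, False, True, False, True, False]
print(agreement_summary(detector_flags, expected_flags))
# {'agreement': 0.8, 'false_positives': 1, 'false_negatives': 1}
```

Keeping false positives and false negatives separate, rather than reporting agreement alone, shows whether a detector errs toward nuisance alerts or toward missed problems.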

The “machine learning” model was trained on a large set of images in which water droplets on the camera lens degrade the image. It is quite effective at identifying this type of issue, although its rating of a problem's severity often differs from a human reviewer's. Because machine learning models are inherently difficult to “see inside,” it is hard to track down the causes of false positive and false negative detections beyond the obvious cases, such as cameras with a large area of ocean in the frame. Additional training with an expanded set of images would likely improve accuracy somewhat. One drawback of this approach is that it is computationally intensive: running the detector on every frame of video in real time would require adding a separate graphics card (GPU) to the EM system, which would be costly in both money and energy use. However, processing only a fraction of frames (1 in 5 or 1 in 10) would result in only a slight loss of detection accuracy. The “machine learning” droplet detector was consistent with expected results in approximately 95% of the video clips analyzed.
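Processing only every Nth frame and turning the scores into an alert for the skipper could look like the following Python sketch. This is not the project's code: `detector` is a hypothetical stand-in for the trained droplet model (any callable returning a score between 0 and 1 for one frame), the 1-in-5 default mirrors the fraction mentioned above, and the window length and threshold are made-up values.

```python
from collections import deque
from typing import Callable, Iterable, Iterator, Tuple, TypeVar

Frame = TypeVar("Frame")


def monitor(frames: Iterable[Frame],
            detector: Callable[[Frame], float],
            every_n: int = 5,
            window: int = 6,
            threshold: float = 0.5) -> Iterator[Tuple[int, float]]:
    """Score every `every_n`-th frame with the (hypothetical) `detector` and
    yield (frame_index, rolling_average) when the rolling average of the last
    `window` scores first rises above `threshold`."""
    recent = deque(maxlen=window)  # most recent detector scores
    alerting = False
    for i, frame in enumerate(frames):
        if i % every_n:
            continue  # skip frames to save compute
        recent.append(detector(frame))
        if len(recent) < window:
            continue  # wait until the window is full
        avg = sum(recent) / len(recent)
        if avg > threshold and not alerting:
            alerting = True  # alert once per episode of degraded video
            yield i, avg
        elif avg <= threshold:
            alerting = False  # quality recovered; re-arm the alert


# Toy usage: each "frame" is already a droplet score, so detector is identity.
frames = [0.0] * 50 + [0.9] * 50
for index, score in monitor(frames, detector=lambda f: f):
    print(f"alert at frame {index}: rolling score {score:.2f}")
```

Averaging over a window smooths out single-frame misdetections, and alerting only when the average first crosses the threshold avoids repeatedly notifying the crew about the same problem.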

A second approach, based on a “difference of Gaussians” algorithm, is more broadly applicable than the water droplet detector. It identifies areas of the image that have lost detail, so it can also detect haze, salt crust, and lighting problems. The basic algorithm was refined to suppress detections in large, completely uniform areas, such as the sky at night. Even with these refinements, large areas of uniform color, such as aluminum bait sheds or the sides of boats, can trigger false detections. The difference of Gaussians approach was consistent with expected results in about 90% of the clips analyzed. As with the droplet detector, clips with significant deviations from expected results were split almost evenly between false positives and false negatives.
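The difference-of-Gaussians idea can be sketched in Python with NumPy. This is an illustration, not the project's implementation: blurring a grayscale frame at a small and a large scale and subtracting leaves mostly fine detail, so tiles where that difference is weak look hazy or out of focus. The tile size, blur widths, and both thresholds are hypothetical, and skipping tiles with almost no variation at all is only one possible reading of the refinement for large uniform areas described above.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel, truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array; edges padded by replication."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Convolve along each row, then along each column; "valid" trims the padding.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def low_detail_fraction(gray: np.ndarray, sigma_fine: float = 1.0,
                        sigma_coarse: float = 3.0, tile: int = 32,
                        detail_thresh: float = 2.0,
                        uniform_thresh: float = 1.0) -> float:
    """Fraction of tiles that look blurred or hazy (hypothetical thresholds)."""
    img = gray.astype(float)
    # Band-pass response: fine detail survives the small blur but not the large one.
    dog = blur(img, sigma_fine) - blur(img, sigma_coarse)
    h, w = img.shape
    flagged = total = 0
    for y in range(0, h - tile + 1, tile):
        for x in range(0, w - tile + 1, tile):
            total += 1
            if img[y:y + tile, x:x + tile].std() < uniform_thresh:
                continue  # completely uniform area (e.g. night sky): do not flag
            if np.abs(dog[y:y + tile, x:x + tile]).mean() < detail_thresh:
                flagged += 1  # some structure, but little fine detail
    return flagged / total if total else 0.0


if __name__ == "__main__":
    # Synthetic frames: a brightness gradient with fine checkerboard texture,
    # a heavily blurred ("hazy") copy, and a completely uniform frame.
    yy, xx = np.mgrid[0:128, 0:128]
    sharp = xx * (200 / 127) + np.where((yy // 4 + xx // 4) % 2 == 0, 40.0, -40.0)
    hazy = blur(sharp, 6.0)
    uniform = np.full((128, 128), 30.0)
    for name, frame in [("sharp", sharp), ("hazy", hazy), ("uniform", uniform)]:
        print(name, low_detail_fraction(frame))
```

On these synthetic frames the sharp image should score near zero, the hazy copy should have most tiles flagged, and the uniform frame is suppressed entirely. Real deck scenes are harder: as noted above, large plain surfaces such as bait sheds or hull sides have little fine detail but are not uniform enough to be skipped, which is one way false positives arise.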

Water droplets are by far the most common problem in this test dataset. They tend to exacerbate lighting and glare issues, and when they come from saltwater spray, they leave haze and salt crust behind on the lens. Water droplets are also the most directly addressable cause of image quality issues, at least for cameras within easy reach of the crew. Notably, the primary reviewer rated image quality issues as more or less severe based on their impact on the review, often relative to earlier video clips from the same trip rather than on an absolute scale. With further investigation and on-water testing, it may be possible to identify the root causes of many image quality issues more consistently, before they become larger problems.

If this is something you find interesting or applicable for your region, the code developed in this project is open source. Please contact Dan Falvey of Alaska Longline Fishermen’s Association at 907-747-3400 or via email, or Abigail Turner-Franke of North Pacific Fisheries Association at 907-953-0929 or via email. This project report was a collaborative effort of Abigail Turner-Franke of NPFA, Dan Falvey of ALFA and Eric Torgerson of Chordata LLC.

Projects in the Field is a series of independently produced articles profiling work supported by NFWF’s Electronic Monitoring & Reporting Grant Program, and is meant to raise awareness and support for these important initiatives. To submit an article for this series, please contact us at info@em4.fish.