One major challenge to the growth of electronic monitoring worldwide is how to efficiently review massive quantities of video data. In the absence of new techniques, the industry may soon be burdened with manual review of millions of hours of footage per year. By now, many in the fishtech world have heard of the potential of automating much of this review with artificial intelligence (AI), but we’ve yet to see widespread tangible gains from AI deployment in the real world. Why is this, and what is necessary for these technologies to actually make a positive impact on EM? Over the last year, our company has begun to answer these questions.

Thanks to support from the NFWF Electronic Monitoring and Reporting grant program, in 2020 we began development of a collection of machine learning tools directly targeted at increasing the efficiency of EM review in longline fisheries. Our aim was to perform an in-depth evaluation of AI methods for fisheries data review, and to prototype a software interface allowing video reviewers to use AI to speed up their workflows. The project was conducted in collaboration with a diverse collection of stakeholders in Hawaii’s tuna and swordfish longline fishery, and has resulted in partnerships in other fisheries around North America. It has laid the groundwork for a system that will save reviewers time and money, add value for captains, and, perhaps most importantly, reduce bottlenecks in the review process.

At Ai.Fish our goal is to use artificial intelligence and machine learning to improve marine conservation and ocean science, and EM is currently one of our top priorities. We are based on Oahu, where we were initially introduced to EM by longline captains, and we learned more through conversations with folks at the Pacific Islands Fisheries Science Center (PIFSC).

Hawaii’s longline fishery comprises 145 active deep-set tuna vessels and 18 shallow-set vessels targeting swordfish. EM has been trialed in the region through pilot projects within PIFSC as well as through the NFWF EMR program. Today there are 18 vessels equipped with EM systems in the fishery, which boasts an annual landed value of over $100 million. Target observer coverage in the deep-set fishery is 20%, while coverage in the shallow-set fishery is 100%.

AI and Its Challenges

What exactly does “artificial intelligence” mean? The term is broad, and refers to a wide range of decision-making algorithms that mimic human intelligence. Much of the hype around AI concerns a subfield called “machine learning,” which designs algorithms that automatically get better over time as they are exposed to additional data. In the context of EM, potential uses are numerous; from catch accounting to traceability, almost any stage of the EM lifecycle in which decisions are made based on data can be improved by machine learning algorithms.

Our project focused on a subfield of machine learning called “computer vision,” in which the data of interest consists of images or video. The end goal of a computer vision system for EM is to perform or assist tasks typically performed by human reviewers on land. These tasks mostly involve catch accounting, including identification of catch events, species classification, discard status, and identification of bycatch and protected species interactions. Current review processes are quite inefficient, as reviewers spend a majority of their time scanning mostly empty video looking for periods of relevant activity. Even partial automation of this process could save reviewers thousands of hours of work per year.

Building a system to automatically perform these tasks is not easy, however. Existing machine learning methods have been developed for highly specific and simple tasks, on top of which additional logic is needed to do anything useful. For example, a common computer vision task relevant to EM is called “object detection,” in which a machine learning model is taught to locate objects of interest. In the context of EM, these objects include fish, humans, and gear within an image. If trained with enough data these models actually do quite well at this task, but this is just the first step to performing actual catch accounting. Take the following sequence of images, for example.

These images are frames of video taken from a typical catch event onboard a longline vessel. The overlaid colored rectangles were automatically rendered by a machine learning model trained to detect fish. As you can see, results vary significantly across these similar frames: the model outputs anywhere from one to three fish detections throughout the sequence. This AI model does not interpret the content of an image in the same way a person does; it simply recognizes patterns in pixels which correlate with the presence of a fish. So while a person would reason that both fish have remained stationary, the naive machine learning model is confused by the changes in appearance caused by movement of the fisherman.
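To make the frame-to-frame instability concrete, here is a minimal sketch of one very simple kind of "additional logic": smoothing the per-frame detection count with a sliding median. This is an illustration only, not the method used in our project; the function name, the window size, and the example counts are all hypothetical.

```python
from statistics import median_low

def smooth_counts(counts, window=5):
    """Replace each per-frame detection count with the median of a centered window.

    `counts` is a hypothetical list of how many fish a detector reported in
    each consecutive frame. Windows are truncated at the ends of the sequence.
    """
    half = window // 2
    smoothed = []
    for i in range(len(counts)):
        lo = max(0, i - half)
        hi = min(len(counts), i + half + 1)
        # median_low always returns a value present in the window (never x.5)
        smoothed.append(median_low(counts[lo:hi]))
    return smoothed

# Hypothetical detector output: two stationary fish, with occasional
# missed or spurious detections as the crew member moves around.
raw = [2, 2, 1, 2, 3, 2, 2, 1, 2, 2]
print(smooth_counts(raw))
```

A filter like this only hides isolated glitches in the count. It says nothing about which detection belongs to which fish, which is why it falls well short of real catch accounting.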

Complex additional logic is needed to deal with these misidentifications in a way that generalizes broadly. Keep in mind that catch accounting involves much more than simply locating fish in an image. Once this can be done accurately, there remains the task of keeping track of all these fish throughout their time onboard. In computer vision, this task is called “object tracking,” and it is a very challenging and active research area. Even state-of-the-art methods for object tracking do quite poorly with EM video data, often double- or triple-counting catch events due to the complex interactions between crew and catch. Due to these challenges, we believe full automation of the catch accounting process is still several years away, but large practical gains can be made even with the imperfect methods available today.
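The double-counting problem can be illustrated with a deliberately naive tracker. The sketch below links detections across frames by bounding-box overlap (intersection over union, or IoU) and counts how many distinct tracks it creates. It is a toy under stated assumptions, not a state-of-the-art tracker and not our production system; the box format `(x1, y1, x2, y2)`, the thresholds, and the example frames are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def count_tracks(frames, iou_threshold=0.5, max_missed=2):
    """Count distinct tracks using greedy frame-to-frame IoU matching.

    A track survives up to `max_missed` consecutive frames without a match;
    after that it is dropped, and a reappearing fish is counted again.
    """
    tracks = []  # each track: {"box": last matched box, "missed": frames unmatched}
    total = 0
    for detections in frames:
        unmatched = list(detections)
        for track in tracks:
            best = max(unmatched, key=lambda d: iou(track["box"], d), default=None)
            if best is not None and iou(track["box"], best) >= iou_threshold:
                track["box"], track["missed"] = best, 0
                unmatched.remove(best)
            else:
                track["missed"] += 1
        tracks = [t for t in tracks if t["missed"] <= max_missed]
        for d in unmatched:  # anything left over starts a new track
            tracks.append({"box": d, "missed": 0})
            total += 1
    return total

# Two stationary fish; the second is hidden by a crew member in the middle frame.
frames = [
    [(10, 10, 50, 30), (60, 10, 100, 30)],
    [(11, 10, 51, 30)],
    [(10, 11, 50, 31), (61, 10, 101, 30)],
]
print(count_tracks(frames))                # tolerant of brief occlusion
print(count_tracks(frames, max_missed=0))  # occluded fish is counted twice
```

With no tolerance for missed frames, the briefly occluded fish is counted as a new catch when it reappears. Real footage adds fish that are moved, stacked, and carried out of frame, so simple overlap rules like this break down quickly.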

Results

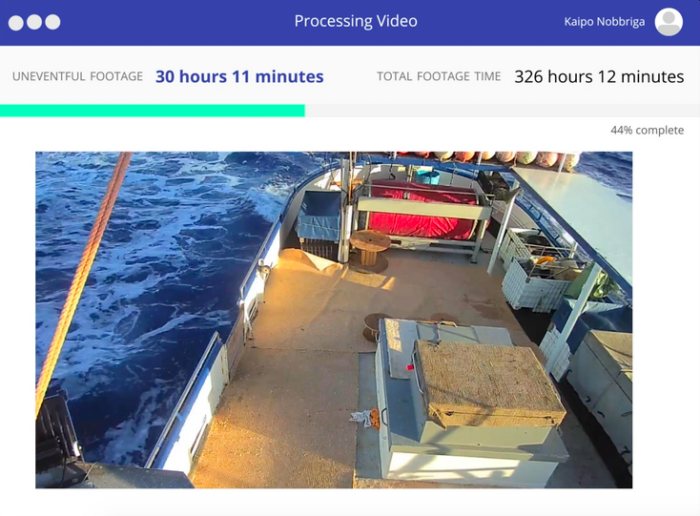

Our first key goal for this project was to demonstrate the practical uses of machine learning that can be adopted right now to improve the EM video review process. Based on stakeholder feedback, our major focus was on reducing the amount of time spent sifting through uneventful video in search of catch events. EM systems often record video 24 hours a day, or use simple motion detection algorithms to turn the camera on when there is activity onboard. In either case, typically 5% or less of this video contains relevant fishing activity. We trained a machine learning model to detect longline crew activity for the purpose of identifying sets and hauls, as well as to detect segments of video containing fishing activity. This can be used to eliminate uneventful segments of video, greatly reducing the amount of footage requiring review. To enable rapid data processing we then engineered a system to analyze the video on a distributed collection of cloud-based servers. Finally, in order to make the system accessible and to provide stakeholders with updates about the processing of their trips, we designed a user interface for easy upload and data processing.
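As a rough illustration of how per-frame activity predictions can be turned into a shortlist of footage to review, the sketch below thresholds a sequence of activity scores, pads each active span, and merges spans that touch. The scores, threshold, and padding are hypothetical, and this is not a description of our actual pipeline.

```python
def active_segments(scores, threshold=0.5, pad=1):
    """Return half-open (start, end) index ranges that should be kept for review.

    `scores` is a hypothetical list of per-sample activity scores in [0, 1],
    for example one score per second of video from an activity classifier.
    """
    # 1. Find raw runs of samples at or above the threshold.
    runs, start = [], None
    for i, s in enumerate(scores):
        if s >= threshold and start is None:
            start = i
        elif s < threshold and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(scores)))

    # 2. Pad each run so context around the activity is kept, then merge overlaps.
    merged = []
    for a, b in runs:
        a, b = max(0, a - pad), min(len(scores), b + pad)
        if merged and a <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], b))
        else:
            merged.append((a, b))
    return merged

scores = [0.1, 0.0, 0.9, 0.8, 0.2, 0.7, 0.1, 0.0, 0.0, 0.0]
segments = active_segments(scores)
kept = sum(b - a for a, b in segments) / len(scores)
print(segments, f"{kept:.0%} of samples kept for review")
```

Padding trades a little extra footage for a lower chance of clipping the start or end of a catch event, which matters when a reviewer needs context to confirm what happened.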

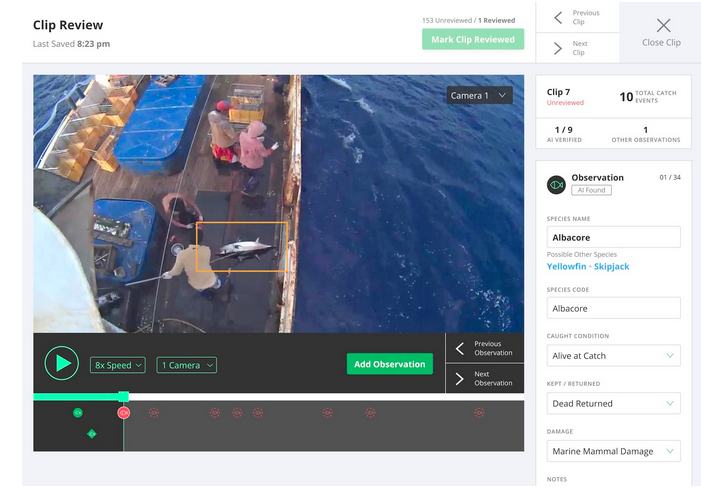

Our second goal was focused more on the near-future of machine learning for EM, and involved prototyping a user interface and software workflow to assist reviewers using AI output. This software envisions a workflow for reviewers built around automated AI processing from the ground up, creating a “human-in-the-loop” review system for efficient data processing. It assumes that the machine learning system has the capability to identify catch events and predict fish species. The key task of the human reviewer is then to corroborate these identifications and add additional information. The goal is to eliminate most of the tedious work and harness the expertise of the reviewer for the most difficult tasks.

One of the most rewarding parts of this project was working with reviewers to obtain feedback about the interface (entirely via video chat, due to COVID-19). Though our design intuitions proved generally correct, it was very instructive to observe potential users interacting with initial prototypes, which exposed hiccups in the workflow. And of course it was exciting to watch this workflow improve over time, thanks to their feedback.

One advantage to designing our interface around a backend machine learning system is that the experts’ input can be easily fed back into the model to improve its future results. Any time the machine learning predictions are incorrect, the corrected output will be added to its training data, and it will be less likely to repeat the mistake. As time goes on, the system gets better and better at assisting the reviewers in their work.
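The feedback loop described above can be sketched as a small data structure that records a reviewer's decision and queues a new training example whenever the model's prediction was wrong. The class, field names, clip identifiers, and species labels are hypothetical, and a real system would also need to store the video frames and trigger retraining.

```python
from dataclasses import dataclass, field

@dataclass
class FeedbackLog:
    """Collects reviewer corrections for later use as training data."""
    corrections: list = field(default_factory=list)

    def review(self, clip_id, predicted, confirmed):
        """Record the reviewer's label; queue it for retraining if the model was wrong."""
        if predicted != confirmed:
            self.corrections.append({"clip": clip_id, "label": confirmed})
        return confirmed

log = FeedbackLog()
log.review("clip-001", predicted="bigeye tuna", confirmed="bigeye tuna")
log.review("clip-002", predicted="bigeye tuna", confirmed="yellowfin tuna")
print(log.corrections)  # only the corrected clip is queued
```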

This work was made possible thanks to generous contributions from a broad group of stakeholders in the Hawaii fishery. In order to train these machine learning models, we collected data directly from captains in the fishery as well as through EM service providers, including data from a previously funded NFWF EMR project in Hawaii. Through partnerships with Google and Amazon we were able to develop and train a multitude of large machine learning models in the cloud, a process that would otherwise have been cost-prohibitive. And we conducted iterative user testing and received feedback on our interface designs from employees at PIFSC, EM service providers, professional reviewers, and onboard observers.

Future Work

We are very optimistic about the future of machine learning in EM. An ability to perform some machine learning processing onboard the vessel, coupled with new wireless data transmission technology, could help streamline the EM review process significantly. Since processing power aboard vessels will be inherently limited compared to a cloud processing service, we recommend that onboard systems focus initially on reducing the amount of data requiring transmission rather than attempting to perform full catch accounting. This reduction will help to make wireless data transmission practical, eliminating the inefficiencies of mailing hard drives to service providers. We’re working to reduce power requirements of our models in order to make them deployable on low-cost onboard systems for this task.

Significant R&D will be required to develop systems which can partially or fully automate the catch accounting process. Projects in this area will be high-risk and high-reward, as they will be working at the cutting edge of machine learning algorithm development. We recommend the EM community engage with the machine learning research community to develop EM-related benchmarks in order to motivate academic or industrial research. One example of this is fishnet.ai, the longline object detection dataset created by The Nature Conservancy. Development of additional datasets, such as a video dataset for onboard fish tracking, or data collected from different vessel types, will help spur innovation in this area.

Finally, we hope to see continued and expanded coordination between policymakers and AI experts. We’ve already seen this happen through successful workshops such as the Integrating Machine Learning and Electronic Monitoring workshop in the northeast. In order to fully realize the benefits of AI, it is important that policies take into account its current capabilities, strengths, and weaknesses. Similarly, machine learning systems must be designed around specific policy goals, ensure appropriate levels of privacy and confidentiality, and build trust with the fisheries community. As the policies around AI are still in their infancy, it is important to coordinate between stakeholders in these technologies as early as possible to avoid technical debt, that is, additional rework down the road caused by poor design decisions made early on.

We are extremely grateful to NFWF for their support and look forward to continuing to develop beneficial AI for EM. We are currently seeking funding and partnerships to help us scale the system developed through this EMR grant to the stage of production deployment. We are also seeking to expand our portfolio of projects in EM through public and private partnerships, so please reach out to us at contact@ai.fish.