CVision AI is a small company of software engineers, data scientists, and machine learning experts, focused on delivering software solutions for video analytics at scale. Our work spans several domains, but we are primarily focused on the marine sciences. With a dedication to open-source solutions, adoption of cutting-edge vision algorithms, and a relentless focus on customer use cases, CVision has developed a reputation as a reliable partner in analyzing the large volumes of video data generated while exploring and monitoring our oceans. Our tagline, “From Imagery to Insight,” is built into everything we do, from designing camera solutions and efficient data collection processes to enabling collaborative analysis by geographically dispersed teams and automating video review.

My co-founder Jonathan Takahashi and I launched our careers in the defense industry, where we learned signal processing, algorithm development, and object detection for mission-critical software systems. With our combined 25 years’ experience, and backed by the highly skilled CVision AI team, we serve as comprehensive solution providers for our clients.

As Jonathan and I were starting out, we quickly found that the problems we faced were shared by much larger organizations. How can we share video data and annotate it collaboratively? How can we deploy the algorithms we develop so our customers can use them easily? The tools we needed for real, large-scale computer vision applications just didn’t exist, so we set out to create them.

Our projects span ocean exploration, electronic monitoring (EM), and everything in between. Below are several examples.

Building an image library for fisheries

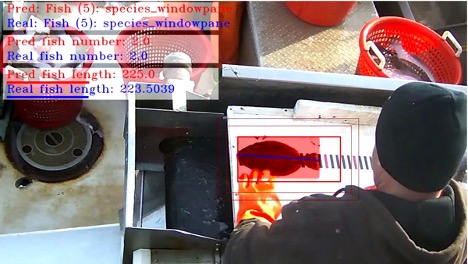

The aim of this project was to develop a training data set for artificial intelligence (AI) algorithms by leveraging existing at-sea fish processing that occurs as part of semi-annual fish trawl surveys conducted by NOAA. This survey samples large quantities of fish caught by vessels in the Northeast Multispecies Groundfish Fishery and supplies expert species identifications.

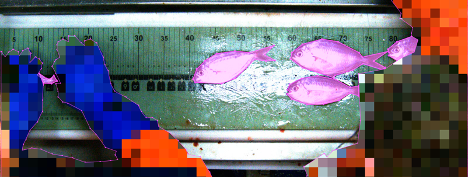

NOAA contracted with us to install computer vision cameras in the wet lab on the Henry B. Bigelow in collaboration with New England Marine Monitoring (NEMM). Over the course of a year, 600 hours of video was collected from four sampling stations. Data was annotated and an object detection and tracking algorithm was developed. After inference, the dataset contains 45 million bounding boxes around individual fish, labeled by species using ground truth data from NOAA’s Fisheries Scientific Computing System (FSCS).

CVision continues to collect data from the survey twice a year, recently upgrading to include stereo systems that enrich the data set further, and expanding the set of foundational algorithms that can be utilized as part of an AI strategy for EM in the Northeast.

Shoresight – Estimating recreational fishing effort from vessel counts

CVision AI has successfully developed Shoresight, a technology that enables intelligent video capture in demanding marine environments. By utilizing rugged and flexible IP security cameras that wirelessly upload data to a secure cloud platform, we have overcome the challenges associated with these settings.

Shoresight video footage is compressed using advanced codecs, ensuring optimal bandwidth usage without compromising quality while allowing for extended capture periods. Although recorded content can be reviewed by experts, we’ve implemented cutting-edge machine vision and artificial intelligence algorithms to alleviate the workload of busy staff members. Our AI algorithms have demonstrated accuracy comparable to human annotators in various scenarios throughout the continental United States, particularly when weather conditions are favorable and the captured views are well-defined.

Tator – Video analytics at scale

One of the first pieces of software that CVision ever built was a video annotation tool called Tator. This desktop app focused on video annotation of large videos at a time when most tools were

focused on imagery. In 2019, we realized that to help our customers organize, share, and analyze their data using the algorithms we developed, we needed an online tool. Tator Online (now just Tator – https://github.com/cvisionai/tator) was born. At that point NOAA funded development of Tator through a Phase I and

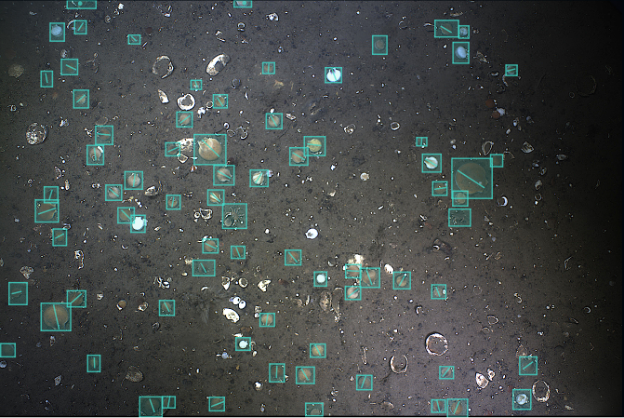

Phase II SBIR, and we have since transitioned it to a successful open-source project, offered as a managed service or as an Enterprise product. Tator is a flexible tool, used for video analysis of underwater footage, but also as a review tool for EM programs in multiple fisheries. One of the first use cases for Tator was to develop and deploy algorithms for the University of Massachusetts School of Marine Science and Technology (SMAST), in conjunction with Professor Kevin Stokesbury’s lab. These efforts focused on groundfish trawl surveys and scallop surveys.

Example video: Multi-object tracking on New England Groundfish

N+1 Fish, N+2 Fish: A data science competition

CVision AI partnered with Kate Wing of the databranch, DrivenData, the Gulf of Maine Research Institute (GMRI), The Nature Conservancy, and researcher Joseph Paul Cohen, PhD of the Montreal Institute for Learning Algorithms to design a data science competition to help bring attention to and begin to reduce the burden of human video review for fisheries management.

GMRI provided hundreds of hours of video data, with loosely correlated annotations for species type and length. Using Tator, our team was able to create a data set with frame-accurate, localized annotations for competitors to use. Working with Dr. Cohen, we then designed a novel competition score metric that combined multiple objectives: count, species ID, and length measurement. The results of the competition became the basis for an open-source library for electronic monitoring, which we called OpenEM.
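As a rough illustration of how three objectives like these can be folded into a single number, here is a minimal Python sketch. Everything in it is hypothetical: the component definitions, the 10 cm length tolerance, and the weights are assumptions chosen for readability, and this is not the metric actually used in the competition.

```python
# Hypothetical multi-objective score. Each component is scaled to [0, 1],
# then the three are combined with illustrative (assumed) weights.

def count_score(true_count: int, pred_count: int) -> float:
    """1.0 for an exact count, decreasing with relative count error."""
    if true_count == 0:
        return 1.0 if pred_count == 0 else 0.0
    return max(0.0, 1.0 - abs(pred_count - true_count) / true_count)


def species_score(correct: int, total: int) -> float:
    """Fraction of fish whose predicted species matches the label."""
    return correct / total if total else 1.0


def length_score(abs_errors_cm: list, tolerance_cm: float = 10.0) -> float:
    """1.0 for perfect lengths, 0.0 once mean error reaches the tolerance."""
    if not abs_errors_cm:
        return 1.0
    mean_error = sum(abs_errors_cm) / len(abs_errors_cm)
    return max(0.0, 1.0 - mean_error / tolerance_cm)


def combined_score(count_s: float, species_s: float, length_s: float,
                   weights: tuple = (0.4, 0.4, 0.2)) -> float:
    """Weighted sum of the three component scores (weights are assumed)."""
    w_count, w_species, w_length = weights
    return w_count * count_s + w_species * species_s + w_length * length_s


if __name__ == "__main__":
    score = combined_score(
        count_score(true_count=10, pred_count=8),
        species_score(correct=9, total=10),
        length_score([1.0, 3.0]),
    )
    print(round(score, 2))
```

A single weighted score of this kind makes a leaderboard easy to rank, at the cost of hiding which objective a given entry is weakest on, so the component scores are worth reporting alongside it.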

Looking Forward – Foundational Models and Data Engines

The field of machine learning and computer vision is moving at an ever-faster pace. Roughly a decade ago, Convolutional Neural Networks burst onto the scene, establishing Deep Learning as the dominant technology for automating visual analysis. These algorithms required vast amounts of annotated data, and collecting it was a time-consuming process that only grew slower as the types of annotations increased in complexity. Recently, Transformers have redefined the AI space, as ChatGPT and similar tools dominate the headlines. Similarly, Vision Transformers are making incredible strides in visual analysis. Models such as Segment Anything from Meta have provided new state-of-the-art tools for assisted annotation, dramatically reducing the effort required to collect the data needed to drive AI adoption in EM. CVision has moved to implement these tools into our customers’ workflows, including integration into our flagship Tator platform. As these open-source tools continue to evolve, we can’t wait to see how they impact the world of EM.

Ben Woodward is CEO of CVision AI. He welcomes your comments or inquiries about his work and that of his team.